How To Find The Least Squares Line

With Machine Learning and Artificial Intelligence booming in the IT marketplace, it has become essential to learn the fundamentals of these trending technologies. This blog on the Least Squares Regression Method will help you understand the math behind Regression Analysis and how it can be implemented using Python.

To gain in-depth knowledge of Artificial Intelligence and Machine Learning, you can enroll for the live Machine Learning Engineer Master Program by Edureka with 24/7 support and lifetime access.

Here's a list of topics that will be covered in this blog:

- What Is the Least Squares Method?

- Line Of Best Fit

- Steps to Compute the Line Of Best Fit

- The least-squares regression method with an example

- A short python script to implement Linear Regression

What is the Least Squares Regression Method?

The least-squares regression method is a technique commonly used in Regression Analysis. It is a mathematical method used to find the line of best fit that represents the relationship between an independent and a dependent variable.

To understand the least-squares regression method, let's get familiar with the concepts involved in formulating the line of best fit.

What is the Line Of Best Fit?

The line of best fit is drawn to represent the relationship between two or more variables. To be more specific, the best fit line is drawn across a scatter plot of data points in order to represent the relationship between those data points.

Regression analysis makes use of mathematical methods such as least squares to obtain a definite relationship between the predictor variable(s) and the target variable. The least-squares method is one of the most effective ways to draw the line of best fit. It is based on the idea that the sum of the squares of the errors must be minimized as far as possible, hence the name least squares method.

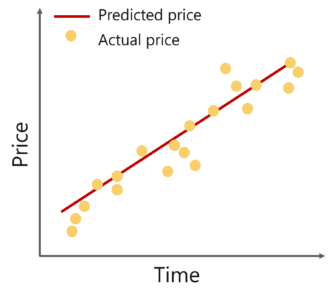

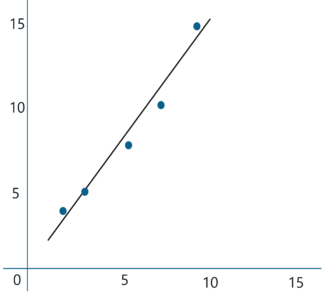

If we were to plot the best fit line that depicts the sales of a company over a period of time, it would look something like this:

Notice that the line is as close as possible to all the scattered data points. This is what an ideal best fit line looks like.

To better understand the whole process, let's see how to calculate the line using Least Squares Regression.

Steps to calculate the Line of Best Fit

To start constructing the line that best depicts the relationship between the variables in the data, we first need to get our basics right. Take a look at the equation below:

$$y = mx + c$$

Surely, you've come across this equation before. It is a simple equation that represents a straight line in two-dimensional data, i.e. along the x-axis and y-axis. To better understand this, let's break down the equation:

- y: dependent variable

- m: the slope of the line

- x: independent variable

- c: y-intercept

So the aim is to calculate the values of the slope and the y-intercept, and then substitute the corresponding 'x' values into the equation to derive the value of the dependent variable.

Let's see how this can be done.

As an assumption, let's consider that there are 'n' data points.

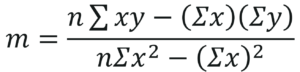

Step 1: Calculate the slope 'm' by using the following formula:

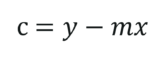

Step 2: Compute the y-intercept (the value of y at the point where the line crosses the y-axis):

$$c = \frac{\sum y - m \sum x}{n} = \bar{y} - m\bar{x}$$

Step 3: Substitute the values in the final equation:

$$y = mx + c$$

Simple, isn't it?
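The three steps above can be sketched in Python as follows. This is a minimal sketch assuming the standard closed-form least-squares formulas, m = (nΣxy − ΣxΣy) / (nΣx² − (Σx)²) and c = (Σy − mΣx) / n; the function names `fit_line` and `predict` are hypothetical and not from the original post.

```python
def fit_line(xs, ys):
    """Return (m, c) for the least-squares line y = m*x + c."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")
    sum_x = sum(xs)
    sum_y = sum(ys)
    sum_xy = sum(x * y for x, y in zip(xs, ys))
    sum_x2 = sum(x * x for x in xs)
    denom = n * sum_x2 - sum_x ** 2
    if denom == 0:
        raise ValueError("all x values are identical; the slope is undefined")
    # Step 1: slope
    m = (n * sum_xy - sum_x * sum_y) / denom
    # Step 2: y-intercept
    c = (sum_y - m * sum_x) / n
    return m, c


def predict(m, c, x):
    # Step 3: substitute x into y = m*x + c
    return m * x + c


m, c = fit_line([1, 2, 3, 4], [3, 5, 7, 9])
print(m, c)               # → 2.0 1.0
print(predict(m, c, 10))  # → 21.0
```

The points in the usage example lie exactly on y = 2x + 1, so the fit recovers that line exactly; with real, noisy data the line only approximates the points.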

Now let's look at an example and see how you can use the least-squares regression method to compute the line of best fit.

Least Squares Regression Example

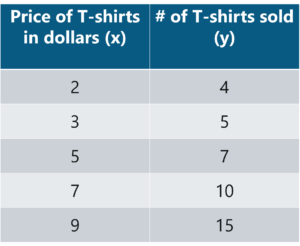

Consider an example. Tom, the owner of a retail shop, recorded the price of different T-shirts vs the number of T-shirts sold at his shop over a period of one week.

He tabulated this as shown below:

Let us use the concept of least squares regression to find the line of best fit for the above data.

Step 1: Calculate the slope 'm' by using the slope formula from the previous section.

After you substitute the corresponding values, m = 1.518 approximately.

Step 2: Compute the y-intercept value

After you substitute the corresponding values, c = 0.305 approximately.

Step 3: Substitute the values in the final equation:

$$y = mx + c$$

Once you substitute the values, it should look something like this:

$$y = 1.518x + 0.305$$

Let's construct a graph that represents the y=mx + c line of best fit:

Now Tom can use the above equation to estimate how many T-shirts priced at $8 he can sell at the retail shop.

y = 1.518 × 8 + 0.305 ≈ 12.45 T-shirts

This comes down to roughly 12 T-shirts. That's how simple it is to make predictions using Linear Regression.
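The same calculation can be reproduced with NumPy. Tom's actual table is only available as an image, so the price and sales values below are an assumption: five hypothetical (price, units sold) pairs that happen to reproduce the slope m ≈ 1.518 and intercept c ≈ 0.305 quoted in the text. The sketch uses `np.polyfit` with degree 1, which performs an ordinary least-squares fit of a straight line.

```python
import numpy as np

# Hypothetical data (assumed, not read from Tom's actual table):
# price of a T-shirt in dollars and number of T-shirts sold.
price = np.array([2, 3, 5, 7, 9], dtype=float)
sold = np.array([4, 5, 7, 10, 15], dtype=float)

# Degree-1 polyfit returns coefficients from the highest power down: [m, c].
m, c = np.polyfit(price, sold, 1)
print(round(m, 3), round(c, 3))  # → 1.518 0.305

# Predict sales for an $8 T-shirt with the fitted line y = m*x + c.
estimate = m * 8 + c
print(round(estimate, 2))  # → 12.45
```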

Now let's try to understand on what basis we can confirm that the above line is the line of best fit.

The least squares regression method works by making the sum of the squares of the errors as small as possible, hence the name least squares. Basically, the vertical distance between each data point and the line of best fit (the error) must be minimized as much as possible. This is the basic idea behind the least squares regression method.
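To see the "least squares" property numerically, the sketch below computes the sum of squared errors (SSE) for the fitted line and for a few lines with a nudged slope or intercept; every nudged line has a larger SSE. The data and the helper name `sse` are hypothetical, chosen only for illustration.

```python
import numpy as np

def sse(m, c, x, y):
    """Sum of squared vertical errors between the points and y = m*x + c."""
    residuals = y - (m * x + c)
    return float(np.sum(residuals ** 2))

# Hypothetical sample data.
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([2.1, 3.9, 6.2, 7.8, 10.1])

m_best, c_best = np.polyfit(x, y, 1)
best = sse(m_best, c_best, x, y)

# Nudging either parameter away from the least-squares solution increases the SSE.
for dm, dc in [(0.1, 0), (-0.1, 0), (0, 0.1), (0, -0.1)]:
    worse = sse(m_best + dm, c_best + dc, x, y)
    print(f"m={m_best + dm:.3f} c={c_best + dc:.3f} SSE={worse:.4f} > {best:.4f}")
```

Because the SSE is a convex quadratic in m and c, the least-squares line is its unique minimum, so any nudge in any direction makes it larger.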

A few things to keep in mind before implementing the least squares regression method are:

- The data must be free of outliers because they might lead to a biased and wrongful line of best fit.

- The line of best fit can be drawn iteratively until you get a line with the minimum possible sum of squared errors.

- The least squares method can also be applied to non-linear data, for example by fitting a polynomial rather than a straight line.

- Technically, the difference between the actual value of 'y' and the predicted value of 'y' is called the Residual (denotes the error).
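Residuals and the effect of an outlier can both be checked with a few lines of NumPy. In this sketch the data are hypothetical: it computes the residuals (actual y minus predicted y), confirms that for a least-squares line with an intercept they sum to roughly zero, and shows how turning a single point into an outlier pulls the slope.

```python
import numpy as np

# Hypothetical data lying close to y = 2x + 1.
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0])
y = np.array([3.1, 4.9, 7.2, 8.8, 11.1, 13.0])

m, c = np.polyfit(x, y, 1)
residuals = y - (m * x + c)  # actual y minus predicted y
print("residuals:", np.round(residuals, 3))
print("sum of residuals:", float(residuals.sum()))  # ~0 for a fit with an intercept

# Replace the last point with an outlier and refit.
y_outlier = y.copy()
y_outlier[-1] = 40.0
m_out, c_out = np.polyfit(x, y_outlier, 1)
print(f"slope without outlier: {m:.3f}, with outlier: {m_out:.3f}")
```

A single bad point at the edge of the x range roughly triples the slope here, which is why the data should be checked for outliers first.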

Now let's wrap up by looking at a practical implementation of linear regression using Python.

Least Squares Regression In Python

In this section, we will be running a simple demo to understand the working of Regression Analysis using the least squares regression method. A short disclaimer: I'll be using Python for this demo. If you're not familiar with the language, you can go through the following blogs:

- Python Tutorial – A Complete Guide to Learn Python Programming

- Python Programming Language – Headstart With Python Basics

- A Beginners Guide To Python Functions

- Python for Data Science

Problem Statement: To use Linear Regression and build a model that studies the relationship between the head size and the brain weight of an individual.

Data Set Description: The data set contains the following variables:

- Gender: Male or female represented as binary variables

- Age: Age of an individual

- Head size in cm^3: An individual's head size in cm^3

- Brain weight in grams: The weight of an individual's brain measured in grams

These variables need to be analyzed in order to build a model that studies the relationship between the head size and brain weight of an individual.

Logic: Implement Linear Regression to build a model that studies the relationship between an independent and a dependent variable. The model will be built using the least squares regression method, and RMSE and R-squared will be the model evaluation parameters.

Let's get started!

Step 1: Import the required libraries

```python
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
```

Step 2: Import the data set

```python
# Reading Data
# (the original post read this from a local Windows path; point it at your copy of headbrain.csv)
data = pd.read_csv('headbrain.csv')
print(data.shape)
print(data.head())
```

Output:

```
(237, 4)
   Gender  Age Range  Head Size(cm^3)  Brain Weight(grams)
0       1          1             4512                 1530
1       1          1             3738                 1297
2       1          1             4261                 1335
3       1          1             3777                 1282
4       1          1             4177                 1590
```

Step 3: Assign 'X' as the independent variable and 'Y' as the dependent variable

```python
# Computing X and Y
X = data['Head Size(cm^3)'].values
Y = data['Brain Weight(grams)'].values
```

Next, in order to calculate the slope and y-intercept, we first need to compute the means of 'x' and 'y'. This can be done as shown below:

```python
# Mean X and Y
mean_x = np.mean(X)
mean_y = np.mean(Y)

# Total number of values
n = len(X)
```

Step 4: Calculate the values of the slope and y-intercept

```python
# Using the formula to calculate 'm' and 'c'
numer = 0
denom = 0
for i in range(n):
    numer += (X[i] - mean_x) * (Y[i] - mean_y)
    denom += (X[i] - mean_x) ** 2
m = numer / denom
c = mean_y - (m * mean_x)

# Printing coefficients
print("Coefficients")
print(m, c)
```

Output:

```
Coefficients
0.26342933948939945 325.57342104944223
```

The above coefficients are our slope and intercept values respectively. On substituting the values in the final equation, we get:

Brain Weight = 325.573421049 + 0.263429339489 * Head Size

As simple as that, the above equation represents our linear model.
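The explicit loop in Step 4 can also be written with vectorized NumPy operations, and `np.polyfit(X, Y, 1)` offers an independent cross-check. Because headbrain.csv is not bundled here, this sketch generates hypothetical stand-in arrays; the function name `least_squares_coefficients` is also hypothetical. With the real `X` and `Y` from Step 3, both approaches should give the coefficients printed above.

```python
import numpy as np

def least_squares_coefficients(X, Y):
    """Vectorized form of the Step 4 loop: returns (m, c)."""
    mean_x, mean_y = X.mean(), Y.mean()
    m = np.sum((X - mean_x) * (Y - mean_y)) / np.sum((X - mean_x) ** 2)
    c = mean_y - m * mean_x
    return m, c

# Hypothetical stand-in data (NOT the real head/brain measurements).
rng = np.random.default_rng(0)
X = rng.uniform(2700, 4800, size=237)
Y = 325 + 0.26 * X + rng.normal(0, 70, size=237)

m, c = least_squares_coefficients(X, Y)
m_ref, c_ref = np.polyfit(X, Y, 1)
print(m, c)
print(np.allclose([m, c], [m_ref, c_ref]))  # → True
```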

Now let's plot this graphically.

Step 5: Plotting the line of best fit

```python
# Plotting Values and Regression Line
max_x = np.max(X) + 100
min_x = np.min(X) - 100

# Calculating line values x and y
x = np.linspace(min_x, max_x, 1000)
y = c + m * x

# Plotting Line
plt.plot(x, y, color='#58b970', label='Regression Line')
# Plotting Scatter Points
plt.scatter(X, Y, c='#ef5423', label='Scatter Plot')

plt.xlabel('Head Size in cm3')
plt.ylabel('Brain Weight in grams')
plt.legend()
plt.show()
```

Step 6: Model Evaluation

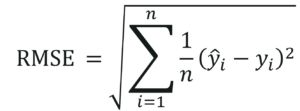

The model built is quite good given the fact that our data set is small. It's time to evaluate the model and see how good it is for the final stage, i.e., prediction. To do that, we will use the Root Mean Squared Error (RMSE), which averages the squared errors and takes the square root of that average.

Mathematically speaking, Root Mean Squared Error is nothing but the square root of the sum of all squared errors divided by the total number of values. This is the formula to calculate RMSE:

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}$$

In the above equation, $\hat{y}_i$ is the i-th predicted output value. Let's see how this can be done using Python.

```python
# Calculating Root Mean Squares Error
rmse = 0
for i in range(n):
    y_pred = c + m * X[i]
    rmse += (Y[i] - y_pred) ** 2
rmse = np.sqrt(rmse / n)
print("RMSE")
print(rmse)
```

Output:

```
RMSE
72.1206213783709
```

Another model evaluation parameter is the R-squared value, a statistical measure of how close the data are to the fitted line of best fit.

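Mathematically, it can be calculated as:

$$R^2 = 1 - \frac{SS_{res}}{SS_{tot}}, \qquad SS_{tot} = \sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2, \qquad SS_{res} = \sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2$$

- $SS_{tot}$ is the total sum of squares

- $SS_{res}$ is the total sum of squares of residuals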

For a least-squares line with an intercept, evaluated on the data it was fitted to, the value of R-squared ranges between 0 and 1. A negative value (possible when a model is evaluated on other data, or when it is not a least-squares fit) indicates that the model predicts worse than simply using the mean of y, so the predictions it makes are unreliable. In such situations, it's essential that you analyze all the predictor variables and look for a variable that has a high correlation with the output. This step usually falls under EDA, or Exploratory Data Analysis.
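A quick numeric illustration of a negative R-squared: if a line is scored on data where it predicts worse than simply using the mean of y, SS_res exceeds SS_tot and 1 − SS_res/SS_tot drops below zero. The data, the helper name `r_squared`, and the deliberately poor line (slope −2) below are all hypothetical.

```python
import numpy as np

def r_squared(y, y_pred):
    ss_tot = np.sum((y - y.mean()) ** 2)  # total sum of squares
    ss_res = np.sum((y - y_pred) ** 2)    # sum of squares of residuals
    return 1 - ss_res / ss_tot

# Hypothetical data with a clear upward trend.
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([2.0, 4.1, 5.9, 8.2, 9.9])

good_pred = np.polyval(np.polyfit(x, y, 1), x)  # least-squares line
bad_pred = -2.0 * x + 12.0                       # a deliberately poor line

print(round(float(r_squared(y, good_pred)), 3))  # close to 1
print(round(float(r_squared(y, bad_pred)), 3))   # negative
```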

Let's not get carried away. Here's how you implement the computation of R-squared in Python:

```python
# Calculating R2 Score
ss_tot = 0
ss_res = 0
for i in range(n):
    y_pred = c + m * X[i]
    ss_tot += (Y[i] - mean_y) ** 2
    ss_res += (Y[i] - y_pred) ** 2
r2 = 1 - (ss_res / ss_tot)
print("R2 Score")
print(r2)
```

Output:

```
R2 Score
0.6393117199570003
```

As you can see, our R-squared value is about 0.64, which means the linear model explains roughly 64% of the variance in brain weight. This indicates that the model is doing reasonably well and can be used for further predictions.
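The RMSE and R-squared loops can likewise be vectorized. The sketch below, again on hypothetical stand-in data rather than headbrain.csv, computes both metrics with NumPy (the helper names `rmse` and `r2_score` are hypothetical) and checks a known property of simple linear regression: for a least-squares line with an intercept, evaluated on its own training data, R-squared equals the square of the Pearson correlation between X and Y, obtained here with `np.corrcoef`.

```python
import numpy as np

def rmse(y, y_pred):
    # Square root of the mean of the squared errors.
    return np.sqrt(np.mean((y - y_pred) ** 2))

def r2_score(y, y_pred):
    ss_tot = np.sum((y - np.mean(y)) ** 2)
    ss_res = np.sum((y - y_pred) ** 2)
    return 1 - ss_res / ss_tot

# Hypothetical stand-in data (NOT the real head/brain measurements).
rng = np.random.default_rng(1)
X = rng.uniform(2700, 4800, size=237)
Y = 325 + 0.26 * X + rng.normal(0, 70, size=237)

m, c = np.polyfit(X, Y, 1)
Y_pred = c + m * X

print("RMSE:", rmse(Y, Y_pred))
print("R2:", r2_score(Y, Y_pred))
r = np.corrcoef(X, Y)[0, 1]
print(np.isclose(r2_score(Y, Y_pred), r ** 2))  # → True
```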

So that was the entire implementation of the Least Squares Regression method using Python. Now that you know the math behind Regression Analysis, I'm sure you're curious to learn more. Here are a few blogs to get you started:

- A Complete Guide To Maths And Statistics For Data Science

- All You Need To Know About Statistics And Probability

- Introduction To Markov Chains With Examples – Markov Chains With Python

- How To Implement Bayesian Networks In Python? – Bayesian Networks Explained With Examples

With this, we come to the end of this blog. If you have any queries regarding this topic, please leave a comment below and we'll get back to you.

If you wish to enroll for a complete course on Artificial Intelligence and Machine Learning, Edureka has a specially curated Machine Learning Engineer Master Program that will make you proficient in techniques like Supervised Learning, Unsupervised Learning, and Natural Language Processing. It includes training on the latest advancements and technical approaches in Artificial Intelligence & Machine Learning such as Deep Learning, Graphical Models and Reinforcement Learning.

Source: https://www.edureka.co/blog/least-square-regression/

Posted by: knightwoust1984.blogspot.com
